What is it?

The Acropolis Volumes API exposes back-end NDFS storage to guest operating systems, physical hosts and containers through iSCSI. This allows any operating system to access the Nutanix DSF (Distributed Storage Fabric) and leverage its storage capabilities. In this deployment, the operating system talks directly to the Nutanix storage layer, bypassing the hypervisor.

The following entities compose the Volumes API:

- Volume Group: an iSCSI target and a group of disk devices, allowing for centralised management, snapshotting and policy application

- Disks: storage devices in the Volume Group (seen as LUNs behind the iSCSI target)

- Attachment: grants a specified initiator IQN access to the Volume Group

Using them takes three steps:

- Create a new Volume Group

- Add disk(s) to the Volume Group

- Attach an initiator IQN to the Volume Group
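To make the relationship between the three entities concrete, here is a minimal in-memory sketch in Python. It is not the Nutanix API: the class and method names (`VolumeGroup`, `add_disk`, `attach`, `visible_luns`) are hypothetical, and the IQN check is a simplified version of the RFC 3720 `iqn.` naming format. It only models the rules described above: disks become LUNs of the group's target, and an attachment grants an initiator IQN access to the whole group.

```python
import re
from dataclasses import dataclass, field

# Simplified IQN check (RFC 3720 style): iqn.<yyyy-mm>.<reversed domain>[:<unique name>]
IQN_RE = re.compile(r"^iqn\.\d{4}-\d{2}\.[a-z0-9][a-z0-9.-]*(:.+)?$")


@dataclass
class Disk:
    index: int      # LUN number exposed behind the Volume Group's iSCSI target
    size_gib: int


@dataclass
class VolumeGroup:
    """Hypothetical model: one iSCSI target holding a group of disks."""
    name: str
    disks: list = field(default_factory=list)
    attached_iqns: set = field(default_factory=set)

    def add_disk(self, size_gib: int) -> Disk:
        """Add a disk; it becomes the next LUN of this group's target."""
        disk = Disk(index=len(self.disks), size_gib=size_gib)
        self.disks.append(disk)
        return disk

    def attach(self, initiator_iqn: str) -> None:
        """Attachment: grant an initiator IQN access to the whole group."""
        if not IQN_RE.match(initiator_iqn):
            raise ValueError(f"not a valid IQN: {initiator_iqn!r}")
        self.attached_iqns.add(initiator_iqn)

    def visible_luns(self, initiator_iqn: str) -> list:
        """LUNs an initiator would see at login: all of them if attached, else none."""
        if initiator_iqn not in self.attached_iqns:
            return []
        return [d.index for d in self.disks]


# Usage: create the group, add two disks, attach two ESXi hosts (hypothetical IQNs).
vg = VolumeGroup("vg-datastore01")
vg.add_disk(500)
vg.add_disk(500)
vg.attach("iqn.1998-01.com.vmware:esxi01-1a2b3c4d")
vg.attach("iqn.1998-01.com.vmware:esxi02-5e6f7a8b")
print(vg.visible_luns("iqn.1998-01.com.vmware:esxi02-5e6f7a8b"))  # [0, 1]
print(vg.visible_luns("iqn.1991-05.com.microsoft:other-host"))    # []
```

Attaching more than one IQN to the same group is what makes the disks shareable between hosts, since access is granted per group rather than per disk.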

How to use it?

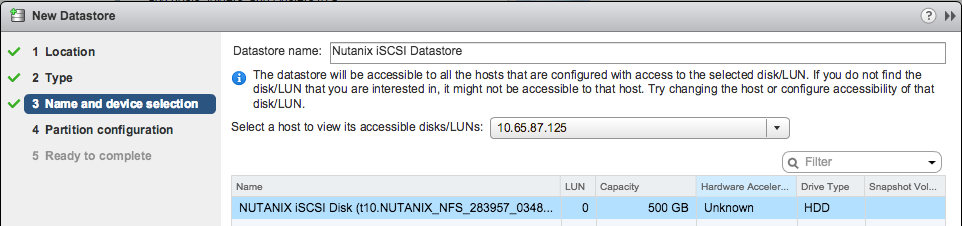

I am going to describe the process of using the Volumes API to provision an iSCSI datastore on vSphere. There are other ways to use the Volumes API, such as presenting storage directly to a bare-metal host or to a VM, but let's get down to it.

Steps on Nutanix Acropolis Layer

The first thing you have to make sure of is that the software iSCSI adapter is enabled on the ESXi host and that the required networking (a VMkernel interface) has been created for it. Then verify that the iSCSI adapter is using this new VMkernel interface.
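The host-side preparation above maps onto a handful of `esxcli` calls. The sketch below only builds and prints the command lines in order; it does not run them. The adapter name `vmhba64` and the VMkernel interface `vmk1` are hypothetical placeholders (check yours with `esxcli iscsi adapter list` and `esxcli network ip interface list`), and the exact output of each command depends on your ESXi version.

```python
import shlex


def iscsi_setup_commands(adapter: str, vmknic: str) -> list:
    """Return the esxcli calls, in order, for the ESXi host-side preparation."""
    return [
        # 1. Enable the software iSCSI adapter on the host.
        ["esxcli", "iscsi", "software", "set", "--enabled=true"],
        # 2. Bind the VMkernel interface created for iSCSI to the adapter.
        ["esxcli", "iscsi", "networkportal", "add", f"--adapter={adapter}", f"--nic={vmknic}"],
        # 3. Verify the adapter is now using that VMkernel interface.
        ["esxcli", "iscsi", "networkportal", "list", f"--adapter={adapter}"],
        # 4. Show the adapter details, including the initiator IQN.
        ["esxcli", "iscsi", "adapter", "get", f"--adapter={adapter}"],
    ]


# Placeholder names: substitute the adapter and vmknic from your own host.
for cmd in iscsi_setup_commands("vmhba64", "vmk1"):
    print(shlex.join(cmd))
```

The IQN reported by the last command is the initiator IQN you would attach to the Volume Group on the Nutanix side.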

Summary:

As you can see, it's very simple to use the Nutanix Acropolis Volumes API as an iSCSI device on vSphere. There are other use cases, listed below, where it is extremely useful:

- Shared disks (Oracle RAC, Microsoft Failover Clustering, etc.)

- Disks as first-class entities

- Guest-initiated iSCSI

- Bare-metal consumers

- Microsoft Exchange on vSphere using iSCSI
